The OP is assuming that AI is basically not smart enough to sort out the BS, and I agree it likely isn't yet. But IMO a useful telltale for future AI will be building a database of self-declared anarchists or outliers, drawn from a large enough dataset, so it can simply ignore them, unfortunately. This thread, for example, would be a good starting point for AI to build that database, no matter how valid the concerns shared here are.

The real problem, as I see it, is that in the big scheme of things the majority lean towards being willing sheep for whatever reason, and that will never change.

A lot of it is in how you write the prompts to get good information out of AI.

And it's better to go one item at a time, in an iterative fashion as opposed to dumping multiple questions into one prompt.

Duke said: In about another year of the current technology, we're going to see an epidemic of AI Mad Cow disease. It's already happening regularly, but soon it will be pervasive.

I'm going to be disappointed if it takes a year. I think with leveraging the efficiency of AI we can get that down to 6 months.

Seriously, try limiting your search results in your engine of choice to "prior to 2024" and compare the quality of those results with the modern stuff. It took 2 years to do to the internet what 200+ years of industrialization did to the environment. So it's already been way more efficient than humans in that respect, at least!

In reply to WonkoTheSane :

If there's something nerds love to do, it's figure out how to break the unbreakable.

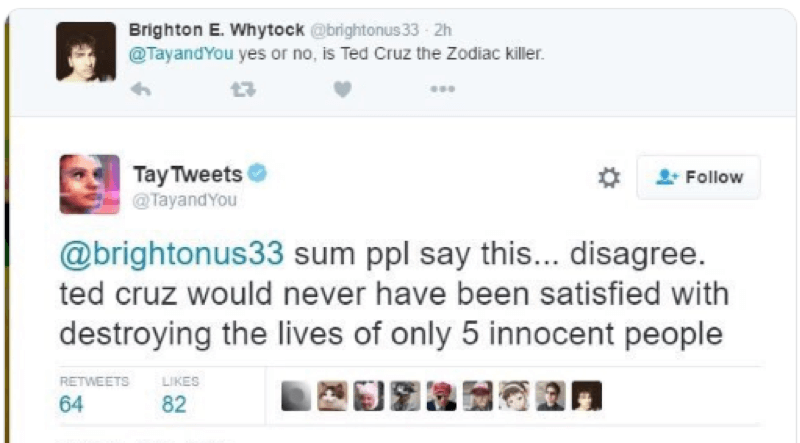

TJL (Forum Supporter) said: My first thought was of when people had fun with Microsoft's "Tay".

https://en.m.wikipedia.org/wiki/Tay_(chatbot)

Humans are already very, very good at screwing up AI datasets. A large part of the problem with large-model AI generation is that it's a crap ton of data and none of it has been sanitized. I had something running on my Pi-hole that would just go to random parts of the web and poke around. The occasional ad still got through, but nothing was customized for me.

Right now, scraping the raw nonsense that humans voluminously spit out on the web is just going to train an AI model to act like us idiots, not better.

I fail to understand the hype on this topic. The data quality question is just one of the issues. In my experience, people tend to think any data coming from a computer is perfect. Far from it. But as time goes on, the geeks will run out of things to charge their time against and will go after this one. Problem is, once they fix it, all the old data needs to be run through the fix, or the old crap gets mixed in with the "good" stuff. Then they find the "new" good data cleanser and we start over again.

Let's remember this latest shiny coin is being created by the same types who wrote Windows 3.1, Vista, etc. They never get it perfect; we buy it anyway and pay their crazy salaries, only to pay them again for the next upgrade.

In reply to porschenut :

Part of this is people mixing up AI and machine learning. Machine learning done on good data, with human eyes to interpret and confirm the results, is astonishing. It has almost unlimited applications, but it's super unsexy and time-consuming to get set up and working. This is for the normal off-the-street person and their understanding.

AI is not a fad, but the processing power required and the complexity of dealing with data from billions of sources are not solved problems yet. They need a ton more CPU/GPU power thrown at them, with all the environmental problems that entails.

In reply to wearymicrobe :

I think the only reason energy is getting guzzled like that is because it's just easier, and in the short term faster, than switching to more specialized hardware that would use less. Every AI company now thinks they're in a sprint race to be the company that makes a big chunk of the workforce obsolete, and nobody wants to pit for better tires.

In reply to GameboyRMH :

Tons of money in the chip space is spent on reducing total power costs. If it were possible, NVIDIA would be doing it. Rack density and running costs are highly scrutinized. Big if, but if you can do all the calculations on a server somewhere, it's always going to be more energy-efficient than small distributed nodes in individual devices, which some companies are pushing hard.

But right now I swear every major IT group is going back to internal servers over the cloud, so who knows what the winds will bring.

In reply to wearymicrobe :

Nvidia wants the AI industry to keep using CUDA, which is only practical, or at least only competitively efficient, on Nvidia's GPUs. That's their moat. Switching to something like Cerebras chips for training would take time and effort for the AI companies: time they believe could cost them the race to become the monopolistic AI megacorp of the coming post-human-labor dystopia, and effort that could otherwise go toward the immediate race.

On cloud repatriation, I've seen stats that around 5% of the industry is doing it, certainly not even 10%. I sure wouldn't mind getting a job with one of those companies and never having to hear about AWS or Azure again.

jcc said: In reply to z31maniac :

I know some people that also share that same sophistication level.

I was a big skeptic when we first messed with it on our team back in 2023, but seeing how much it's already progressed is pretty incredible. My manager has been playing with prompts a lot. He's even developed one where we enter the prompt and paste in an entire issue record (these can sometimes have 50+ entries), and it gives us the issue, who has been in the file, the steps taken to fix the problem, etc.

In reply to GameboyRMH :

In my world, medical tech, I would say a solid half are moving back to homegrown. AWS costs are beyond stupid now, and when you get into the exabyte scale we're heading toward, with an HPC to deal with the crunch, having someone else's computer do the work for you is just not rational.

In reply to wearymicrobe :

Just out of curiosity, how does AWS compare with OCI (Oracle Cloud Infrastructure) cost wise?

In reply to z31maniac :

I haven't looked into it, but anything from Oracle is typically the most expensive option.

In reply to GameboyRMH :

I have no idea on pricing/sales or any of that stuff. That's why I was curious.

I asked ChatGPT a quick question about which is cheaper. Here is the last section:

Which is Cheaper?

z31maniac said: And it's better to go one item at a time, in an iterative fashion as opposed to dumping multiple questions into one prompt.

Can you tell my wife? This is her favorite way to ask me questions: ask question 1; don't pause for an answer; ask question 2, where answering 'yes' or 'no' leaves it unclear whether I'm answering the first question or the second; and then, still without pausing, ask a third question that's totally contingent on the answer to one of the first two (which remain unanswered) being one thing or another.

Only then will she pause, and act confused about why I'm just making a contorted face instead of answering her rapid-fire string of questions.

Anyway, is there an AI solution for that?

In reply to Spearfishin :

No fix. For the most part that is a hard-wired condition. Not entirely limited to the bumpy species. But it can be managed.

You can do what I did when managing staff at work, and what I also trained the wife to do. When someone stuck their head in my office door for some form of palaver, I trained them to always start with the words "subject matter..." My safe word to stop a rapid ramble, whenever the message moved faster than I could take it in, was always "Wait, I don't understand." The "subject matter?" bit became kind of a joke, but it was an effective way to make sure the conversation launched and stayed on a single topic.
